Colorado justices decide pre-2025 law did not criminalize AI-generated child porn

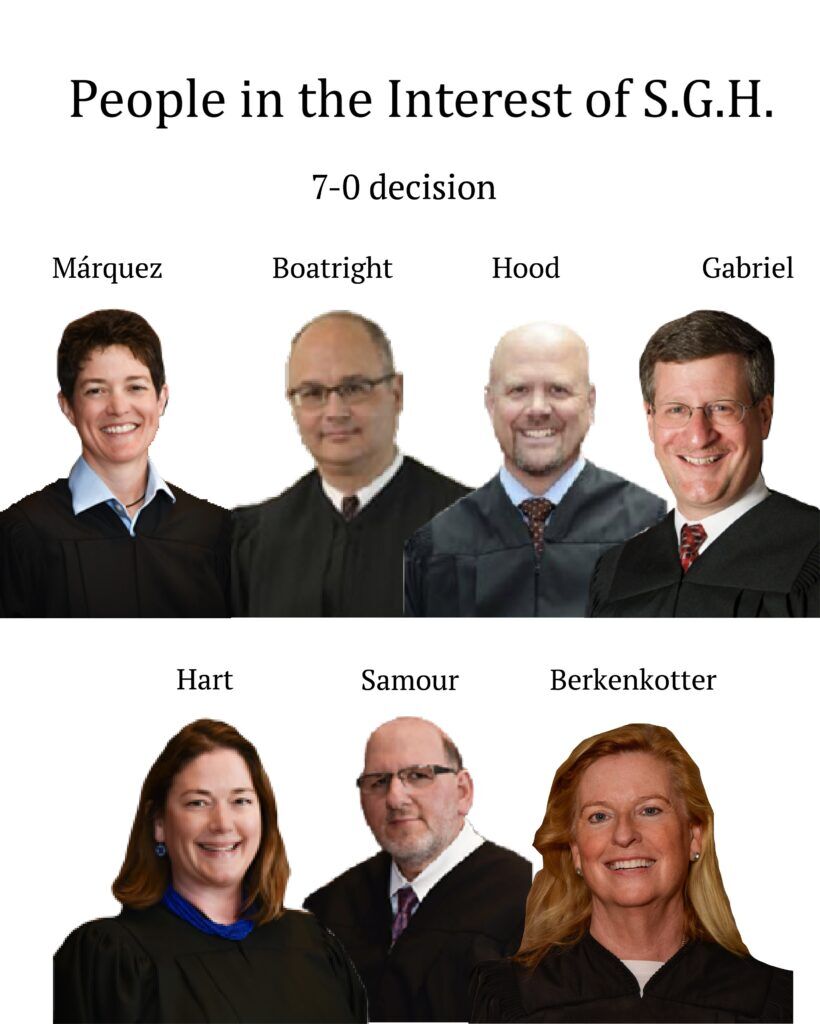

Before 2025, Colorado’s law against sexual exploitation of a child did not criminalize the use of artificial intelligence to generate nude images depicting real children, the state Supreme Court concluded on Monday.

The legislature acted this year to clearly make it a crime for someone to possess or share fake, yet “highly realistic,” images of children that are explicitly sexual. However, the Supreme Court was asked to decide whether a teenager’s 2023 creation of “deepfake” porn was already illegal at the time.

No, it was not, the court concluded.

“Unfortunately, it took our legislature time to catch up to the recent advances in generative-AI technology,” wrote Justice Carlos A. Samour Jr. in the Oct. 13 opinion. Therefore, the defendant “cannot be penalized based on statutory provisions that came into effect after he allegedly engaged in the appalling conduct in question. As undesirable as it may be to deprive the named victims of their day in court in this proceeding, it is the result the law requires and thus the one we reach.”

Prosecutors in Morgan County filed charges against a Weldon Valley High School student, identified as S.G.H., for allegedly taking pictures of three female children and generating realistic deepfaked images of them with visible genitalia.

The charges for sexual exploitation of a child revolved around the existence of “sexually exploitative material.” A trial judge declined to dismiss the charges, believing the pre-2025 definition of the phrase encompassed S.G.H.’s alleged digital creation of explicit content using the faces of identifiable children.

During the appeal to the Supreme Court, prosecutor Madison M. Linton argued that the deepfakes drew from “real human intimate parts” to generate the simulated child bodies — something more realistic than superimposing a new body on an existing head.

Defense attorney Michael S. Juba characterized the government’s position as “if it’s bad AI, then it’s not a crime. But if it’s good AI, then that’s where the crime falls.”

Samour, during oral arguments, observed the pre-2025 law said nothing about realism.

“I just have a hard time believing that when the legislature passed that law, they were thinking about this particular scenario,” he said. “It seems to me they were not. There was a gap. And that gap was identified in 2025.”

Ultimately, the court agreed that the General Assembly’s amendments to the law this year were an indication that S.G.H.’s alleged conduct fell into the “gap” that existed previously.

Samour explained that the AI-generated material did not amount to “photographs,” nor was it “reproduced.” He also rejected the prosecution’s argument that the 2025 changes were clarifications of the existing law, rather than changes to it.

“But that’s a hard sell,” he wrote. “They changed it by contemporizing it based on advances in generative-AI technology.”

The case is People in the Interest of S.G.H.